Empowering Accessibility with AI: 5 Real-World Use Cases

As AI becomes an ever-increasing presence in our lives, what role can it play in breaking down barriers to access, and fostering a more inclusive world?

Artificial Intelligence (AI) is an ever-growing presence in our lives, and its potential to dismantle barriers for people with disabilities is immense. But what specific roles can AI play in fostering inclusion and improving accessibility? In this article, we’ll explore some of the key opportunities AI offers and where it’s already making a difference in both the physical and digital realms.

AI-Driven Automatic Content Descriptions

When discussing AI and accessibility, much of the focus is on the technology’s ability to describe visual content. These generated descriptions are mostly used as alternative text for images, but they also extend to videos and other non-textual content. The goal is to make these materials more accessible to visually impaired users or anyone who disables images due to low bandwidth.

While AI-generated descriptions are becoming increasingly detailed, there’s a crucial aspect to consider: context. Often, these descriptions lack contextual information, leaving users without a clear understanding of how the image relates to the surrounding content or page.

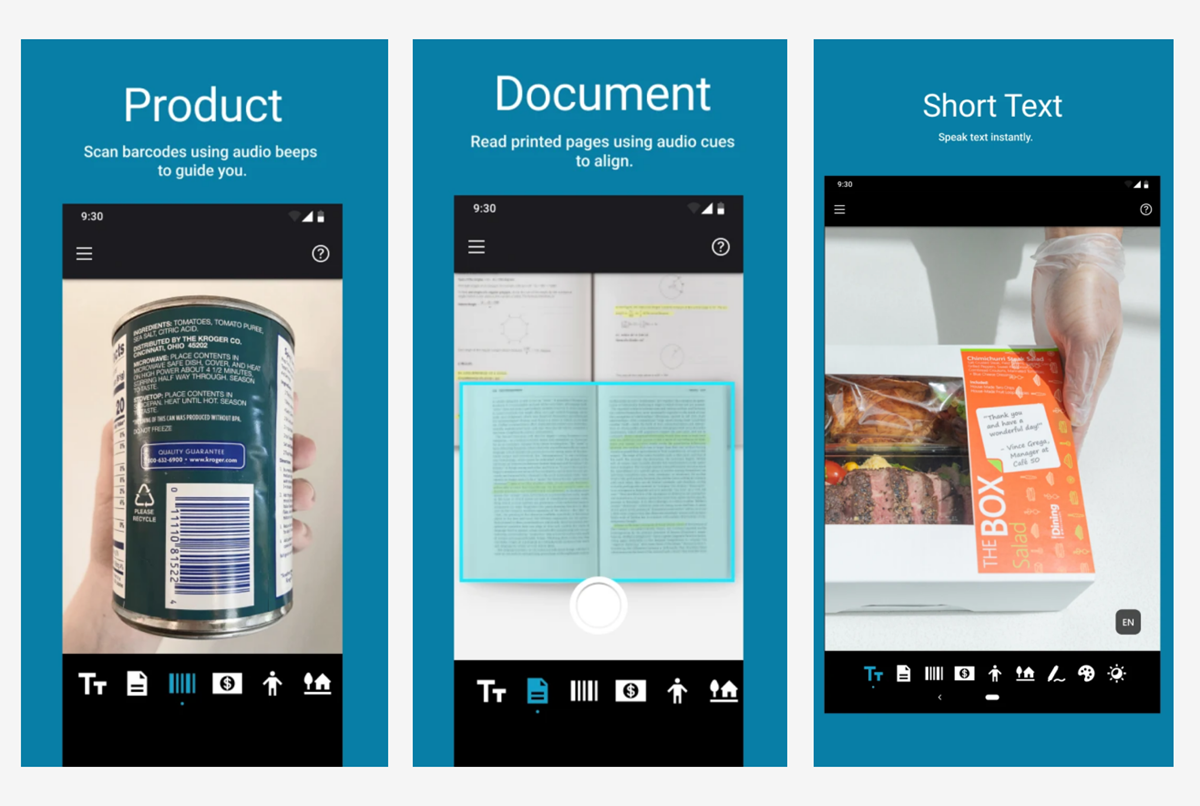

Several innovative apps and services are starting to address this need. One standout example is Microsoft’s Seeing AI. Designed for visually impaired individuals, this app transforms the visual world into an audible experience. Using their phone camera, users can capture images and receive accurate spoken descriptions. Whether it’s postal letters, signs, documents, or even lunch instructions, Seeing AI empowers people to navigate life more effectively. Another noteworthy tool is Be My AI, which excels at providing descriptions for complex data, including charts. Both apps also cleverly use audio cues to guide users on how to position their phone camera to capture the best shot.

Three images show use cases for Microsoft's Seeing AI application, including scanning product barcodes, reading printed pages using audio cues to align the camera, and reading short text.

Real-Time Captions and Transcriptions

Captioning video content is a critical aspect of accessibility. While it’s essential for those who are D/deaf or have hearing impairments, we all encounter situations where captions enhance our understanding. Whether the video is in a foreign language, we’re in a noisy environment, or we’re late-night “doom scrolling,” captions provide clarity. Importantly, this need extends beyond pre-recorded content to live scenarios, such as daily meetings or video calls.

Typing live captions during conversations is a skill, and ensuring consistent availability for every meeting is nearly impossible. Historically, AI-driven solutions struggled with accuracy, often introducing confusion rather than improving access.

AI-powered tools now offer real-time captions and transcriptions for audio and video content. Microsoft Teams, for instance, provides these services during video calls and meetings. Live captions empower participants with hearing difficulties, allowing them to engage fully. Additionally, features like PowerPoint Live enable customisable captions—participants can adjust text size, colour, and even translate it into their preferred language.

At Nexer, we use these tools for internal work, remote workshops with clients, and even livestreamed sections of events such as Camp Digital. These capabilities enhance communication and ensure inclusivity across various scenarios.

Error Detection and Correction

Web accessibility remains a pressing challenge, affecting individuals navigating digital products and services, and should be a priority for those of us designing and building them. Despite the availability of automated tools, the WebAIM Million report reveals an alarming average of 56.8 distinct accessibility errors per page across the top one million homepages. Indeed, it’s estimated that automated tools can miss 70% or more of accessibility issues, which go undetected without manual testing.

As such, resolving accessibility issues can be arduous. It demands expertise in both accessibility principles and your website’s intricacies. Communicating these issues effectively to developers or agencies—especially those inexperienced in accessibility—is equally crucial.

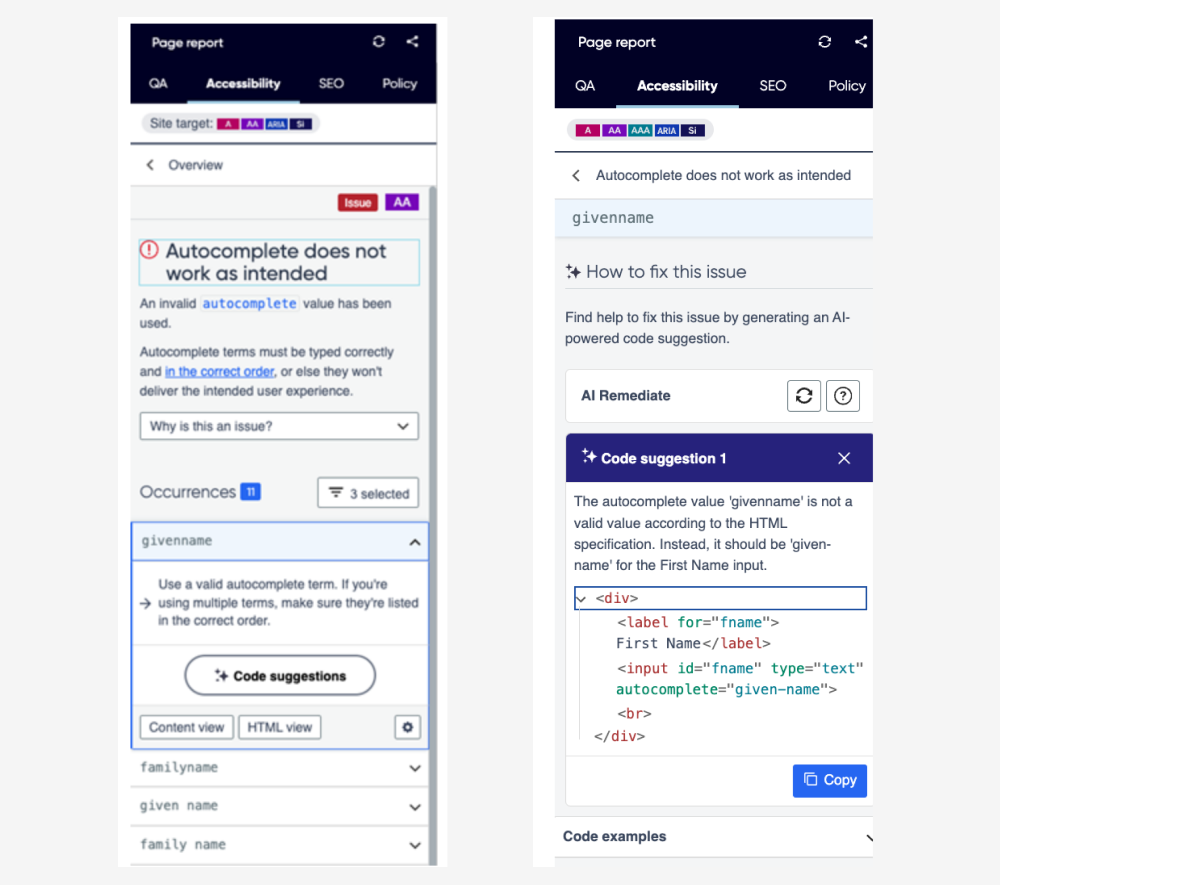

AI can help web developers identify and correct accessibility issues in web content. From improper HTML tag usage to missing alt text for images, AI tools enhance overall accessibility. Platforms like Siteimprove offer AI-driven auditing and improvement features. Their recent release, AI Remediate, not only detects issues but also generates fixes. Alongside AI-recommended code suggestions, it provides clear explanations of the problem and the necessary changes.

Two images show how Siteimprove's AI Remediation Tool flags accessibility issues and offers generic code to resolve the problem.
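
To make the "missing alt text" example concrete, here is a minimal sketch of the kind of rule-based check that automated tools typically start from. It is not how Siteimprove or AI Remediate works; the class name, function name and sample markup are hypothetical, and it uses only Python's standard library. It flags images that have no alt attribute at all, while accepting an empty alt="" because that is the valid way to mark a decorative image.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Collects <img> tags that have no alt attribute at all (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.missing = []  # list of (line number, src) tuples

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        # alt="" is valid for decorative images, so only flag an absent attribute.
        if "alt" not in attr_map:
            line, _column = self.getpos()
            self.missing.append((line, attr_map.get("src", "")))


def find_images_missing_alt(html):
    """Return (line, src) for every <img> in the markup that lacks an alt attribute."""
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.missing


# Illustrative markup only: one image with no alt, one decorative, one described.
sample = """<main>
<img src="chart.png">
<img src="divider.png" alt="">
<img src="team.jpg" alt="Five colleagues around a whiteboard">
</main>"""

for line, src in find_images_missing_alt(sample):
    print(f"line {line}: <img src='{src}'> has no alt attribute")
```

A check like this can only say that a description is absent. Whether an existing description is accurate and fits the surrounding content still needs human review, which is one reason automated testing alone misses so many issues.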

While AI-powered overlays and dynamic content adjustments can offer temporary fixes by adapting in real time to user needs, they are no substitute for proper code-level implementations. AI at the code level helps ensure that accessibility is fundamentally built into the structure of websites and applications, providing a more reliable and consistent experience for all users. Overlays often mask underlying issues without truly resolving them, potentially leading to a false impression of accessibility compliance. Prioritising AI-driven solutions at the code level is far more appropriate and effective in creating genuinely inclusive digital environments.

Cognitive Assistance

Cognitive assistance refers to AI-powered tools that aid individuals with cognitive disabilities in managing schedules, offering reminders, and guiding users through tasks. For example, people with memory challenges can benefit from AI-driven reminders for daily activities, medications, or appointments.

As workplaces become more inclusive, additional support is necessary. Employees with cognitive disabilities often struggle with time management, task organisation, and memory retention. AI can bridge this gap by providing personalised assistance tailored to individual needs. Microsoft Copilot is a great example of this concept, assisting users in various tasks and enhancing productivity and accessibility. Whether it’s managing work schedules, setting reminders, or providing step-by-step guidance, Copilot contributes to a more inclusive and efficient work environment.

AI tools like Copilot and ChatGPT also offer recommendations and analysis of text, helping to reduce the cognitive load of the user. Features like Copilot’s Rewrite tool can support non-native speakers by proofreading, correcting grammar, and helping with vocabulary.

Recently, in our own team, we’ve been exploring how Copilot specifically can help us create more inclusive workplaces and facilitate great collaboration and meaningful productivity. Read about what we've learnt from our experimentation with Copilot.

Real-Time Sign Language Translation

AI systems hold the potential to revolutionise communication between deaf and hearing individuals by translating sign language into spoken or written language, and vice versa. These systems are capable of operating in real time, facilitating seamless interactions.

Sign languages exhibit regional variations, nuances, and context-dependent meanings, presenting significant challenges for AI models to achieve accurate translation across these complexities.

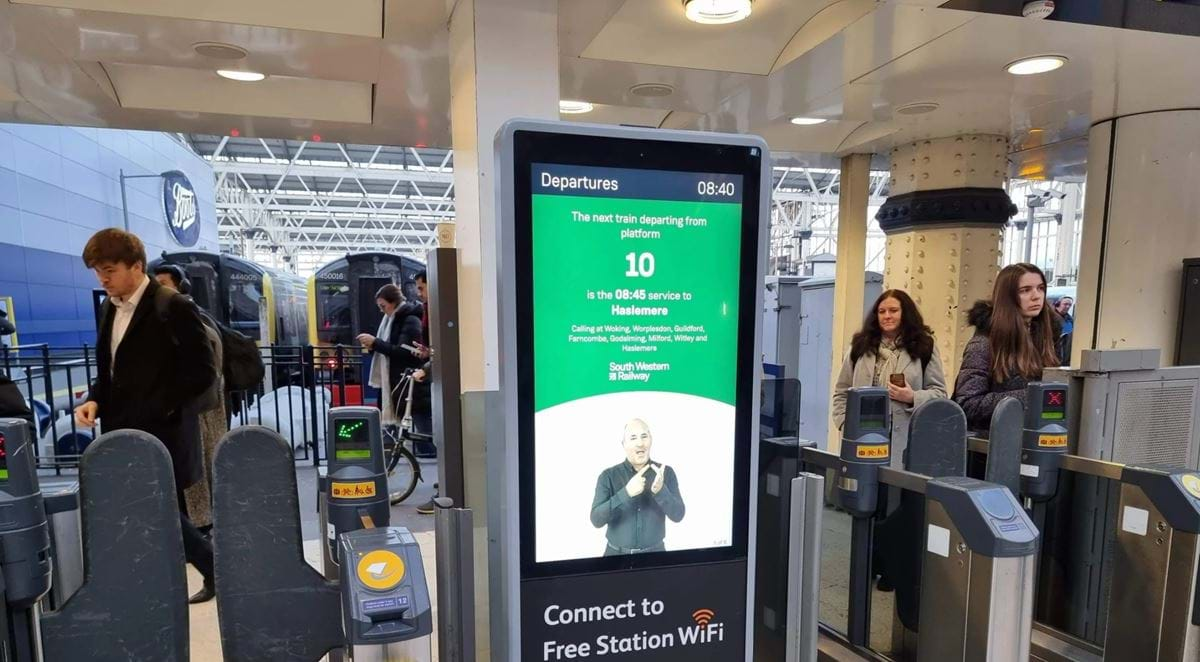

In an exciting trial, London Waterloo is leveraging AI to provide train information through British Sign Language (BSL). This initiative aims to enhance accessibility for deaf passengers, ensuring they receive essential updates in a language they understand.

A screen shows an AI sign language interpreter, situated beside the ticket gate in a train station.

In Summary

Artificial Intelligence (AI) has the potential to play a pivotal role in making our society more inclusive. While current tools still struggle with context in image descriptions and with regional variation in sign languages, real-world applications are already making significant impacts. Apps like Microsoft’s Seeing AI empower visually impaired users, and live captions enhance communication on platforms like Microsoft Teams. AI-driven tools like AI Remediate simplify the detection and correction of web accessibility issues, and innovations in real-time sign language translation promise to bridge communication gaps.

These advancements, alongside trials such as London Waterloo’s BSL train information system, hint at a future where AI seamlessly integrates into our daily lives, fostering greater accessibility in both digital and physical spaces. By continuing to innovate and celebrating these successes, we move closer to a world where everyone truly belongs.

Get in touch

If you would like to work with our team on your project, email us at hello@nexerdigital.com or call our Macclesfield office on +44 (0)1625 725446.